Story

Football has a rich tradition of viewership both in stadiums and at home. Fans flock to stadiums to experience the electrifying atmosphere, the roar of the crowd, and the thrill of watching their favorite teams live. At home, viewers gather with family and friends, often creating a festive environment with snacks and drinks, making it a communal experience. To explore how small additional experiences can enhance engagement with the team and the game, we developed an augmented reality (AR) experience. This AR experience shows the PSV line-up with high visual fidelity, alongside details on the upcoming game and statistics of the players. Accompanying research aims to determine whether these enhancements can increase viewer engagement and enjoyment, whether viewers are watching from the stands or from the comfort of their homes.

Process

4DR Studios and PSV have an established collaboration to create photorealistic digital representations of the players in the team. A previous collaboration between 4DR Studios and BUas showed the opportunity to combine these digital representations with immersive media development and research. These connections led to a collaboration in which the three organizations identified the needs and created the design that led to the application used for the PSV AR project.

Project Setup & Topic Research

From the start of the project we intended to show AR content in both the stadium and a simulated home environment. While the home environment was a known setting, the stadium required some in-person research by the team to understand how matches are organized and where AR content could be deployed.

After this in-person experience we looked for existing applications that use immersive AR content inside stadiums, to identify how industry and academia are currently tackling this topic. We found companies that show phone-based AR content in stadiums in America, and some research projects on the technical feasibility of using glasses. However, no clear information could be found on how users experience these applications.

Technical and Content Decision Making

Facilitating a group of simultaneous users meant that we needed a sizeable number of AR headsets. To meet this need, Effenaar Smart Venues lent us 20 Xreal Light glasses. These allow the user to view digital images superimposed over the real world through a glass lens. The devices also offer basic image tracking and position tracking, and come with an SDK for use inside the Unity3D Game Engine.

We tested the optics of these glasses when visiting PSV during a match. During this match we focused on the usability of the glasses, as well as the type of content most suitable for the application.

Topic research showed that mid-match content can be distracting for the viewer. Our limited timeline also made it difficult to create a polished application that could be used in a truly live context. We therefore decided to develop an application to be used before the start of a match. Users can see statistics on the match and on their favorite players, as well as large-scale 3D representations of these players.

Application Development

Volumetric Captures

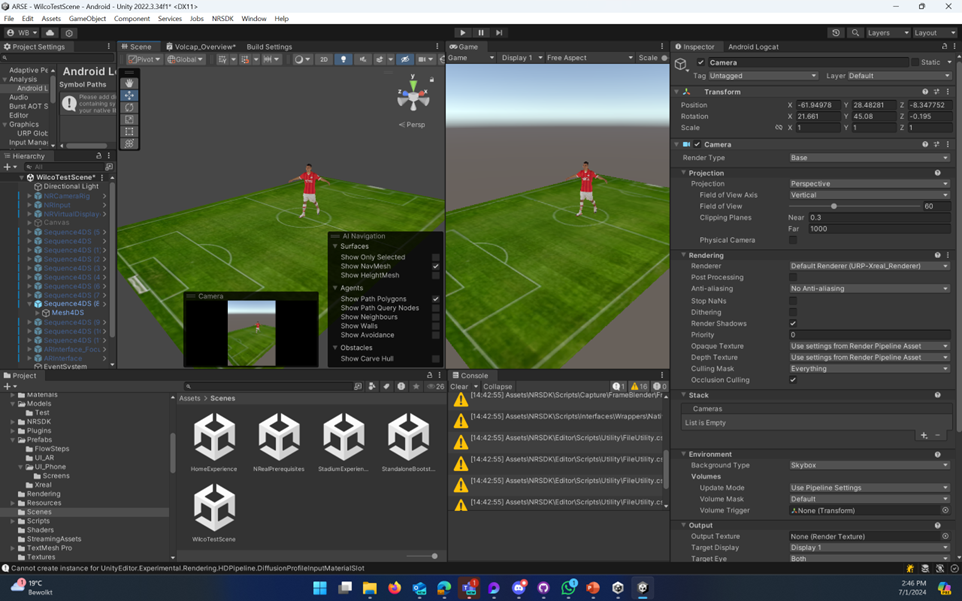

The first challenge we faced was to visualize volumetric data of 11 players simultaneously. This required us to manage how files were played in order to retain a high level of performance on the glasses. We also had to figure out how to consistently place players at a sensible location on the field, while keeping all of the players in view at the same time.
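As an illustration of the placement problem only, the sketch below spreads a goalkeeper and ten outfield players over one half of a pitch so that all eleven fit in a single view. It is not the layout logic used in the project: the function name `formation_positions`, the pitch dimensions, the 5 m goalkeeper offset, and the row-based formation input are all assumptions made for this example.

```python
from typing import List, Tuple

PITCH_LENGTH_M = 105.0  # assumed pitch length, not taken from the project
PITCH_WIDTH_M = 68.0    # assumed pitch width, not taken from the project
KEEPER_OFFSET_M = 5.0   # assumed distance of the goalkeeper from the goal line


def formation_positions(rows: List[int]) -> List[Tuple[float, float]]:
    """Return (x, y) positions in metres for a goalkeeper plus outfield rows.

    x runs along the pitch length from the own goal line, y runs across the
    width. Only the own half is used so that all players stay close together.
    """
    if sum(rows) != 10 or any(count < 1 for count in rows):
        raise ValueError("outfield rows must be non-empty and add up to 10")

    # The goalkeeper stands centrally, just in front of the goal line.
    positions = [(KEEPER_OFFSET_M, PITCH_WIDTH_M / 2)]
    usable_length = PITCH_LENGTH_M / 2 - KEEPER_OFFSET_M

    for row_index, count in enumerate(rows, start=1):
        # Rows are spaced evenly between the goalkeeper and the halfway line.
        x = KEEPER_OFFSET_M + usable_length * row_index / (len(rows) + 1)
        for slot in range(count):
            # Players in a row are spaced evenly across the width.
            y = PITCH_WIDTH_M * (slot + 1) / (count + 1)
            positions.append((x, y))
    return positions


if __name__ == "__main__":
    for x, y in formation_positions([4, 3, 3]):
        print(f"x={x:6.2f} m, y={y:6.2f} m")
```

Computing positions from a formation description, rather than hand-placing each player, keeps the layout consistent between sessions and makes it easy to rescale for the table-top and stadium versions.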

Interface

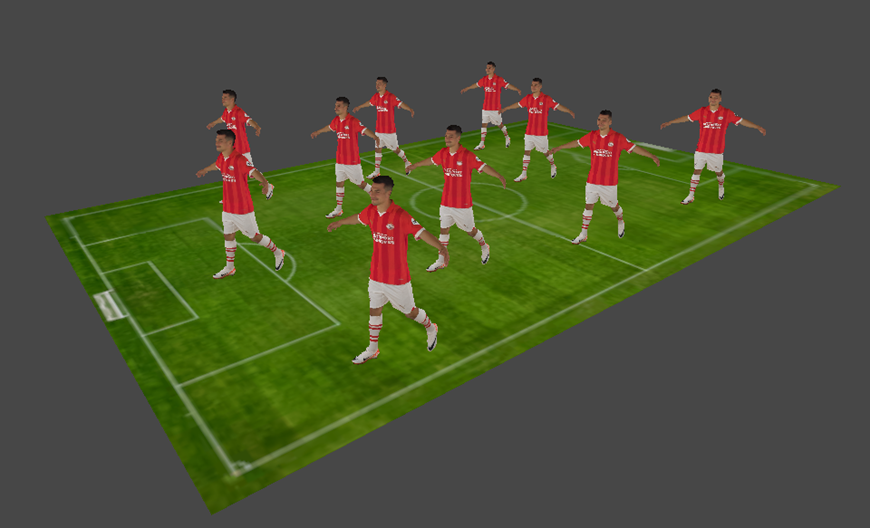

The application required two separate interfaces. The first was the 2D phone interface used to control the application. Within this interface the researcher would select the location and position to determine which version of the app would open. The user's main interaction consisted of selecting a specific player to highlight and, in the stadium experience, swapping between a Team-Overview and a Player-Overview mode.

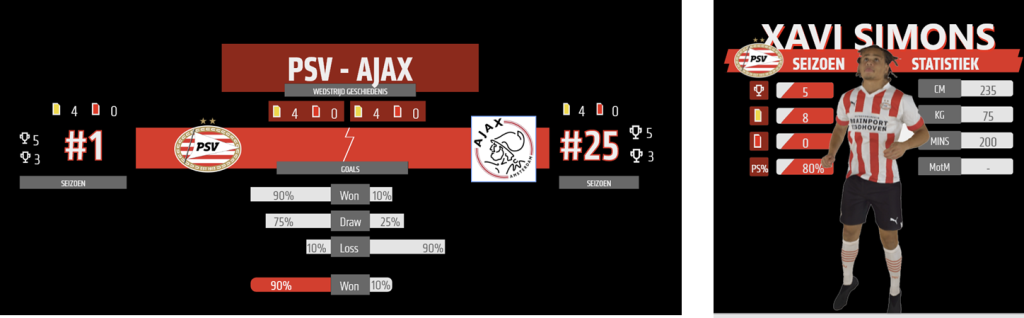

The 3D (AR) interface was designed to show an extensive amount of information about the upcoming match. Here users could see statistics on the historical head-to-head record of the teams as well as their current standings. The Player-Overview would show general information about the player as well as seasonal statistics.

Spatial Placement

The last major development step was the spatial placement of content. We had experience with manual, automated, and image-assisted spatial localization, and used this to create two separate placement methods:

- At-home placement using an image: this image was placed on the table to provide a consistent anchor for all users

- In-stadium placement by selecting the seat and asking users to look straight at the center of the pitch: a simple interaction with results accurate enough for this study

More information on placement options can be found in one of our gems.
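The in-stadium method can be reasoned about as a small alignment problem: the seat gives the user's position relative to the pitch, and the gaze at the pitch center gives the heading. The sketch below shows one way to turn that into a coordinate transform. It is an assumption-laden illustration, not the project's Unity implementation: the function `pitch_to_headset`, the pitch-centered coordinate frame, and the idea that the headset frame is gravity-aligned with the user at its origin are all hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, height) in metres


def pitch_to_headset(point: Vec3, seat: Vec3, forward_yaw_rad: float) -> Vec3:
    """Map a point from the pitch frame into the headset tracking frame.

    Assumptions (hypothetical, for illustration):
    - The pitch frame has its origin at the center spot, with z pointing up.
    - `seat` is the user's position in that frame, e.g. from a seat map.
    - `forward_yaw_rad` is the headset's forward heading in its own frame,
      sampled while the user looks straight at the center of the pitch.
    - The headset frame is gravity-aligned and has the user at its origin.
    """
    if seat[0] == 0.0 and seat[1] == 0.0:
        raise ValueError("seat must not be directly above the pitch center")

    # Bearing from the seat to the pitch center, expressed in the pitch frame.
    bearing = math.atan2(-seat[1], -seat[0])
    # Rotation that turns this bearing into the headset's forward heading.
    theta = forward_yaw_rad - bearing
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    # Express the point relative to the seat, then rotate about the up axis.
    dx, dy, dz = point[0] - seat[0], point[1] - seat[1], point[2] - seat[2]
    return (cos_t * dx - sin_t * dy, sin_t * dx + cos_t * dy, dz)


if __name__ == "__main__":
    seat = (0.0, -40.0, 10.0)  # hypothetical seat: 40 m from center, 10 m up
    print(pitch_to_headset((0.0, 0.0, 0.0), seat, forward_yaw_rad=0.0))
```

With this transform the pitch center always ends up straight ahead of the user at the seat's distance, so any error in the result comes from how precisely the seat position is known and how accurately the user looks at the center.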

Final Application

Data Collection

Evaluation of the application, and of its impact on the experience of watching a football match, took place during 6 matches in the 2023/2024 season. During each match our participants were spread over 4 groups: watching the game in a simulated home setting or in the stadium, and with or without the AR application.

We used several research tools to measure how participants experienced and used the application. Pre- and post-experience questionnaires identified the users' previous experience, viewing behavior, and emotional state. Physiological data tracking with the Empatica E4 and Shimmer GSR+ measured the participants' emotional arousal through skin conductance. Finally, in-app data was gathered to understand how long each user used the AR application and what the user was looking at.
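To make the in-app measure concrete, the following sketch shows how total usage time and per-target viewing time could be derived from a log of timestamped gaze samples. The log format, the target names, and the function `dwell_times` are hypothetical; the actual logging format of the application is not described here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# One sample: (timestamp in seconds, name of the viewed target or None).
GazeSample = Tuple[float, Optional[str]]


def dwell_times(samples: Iterable[GazeSample]) -> Dict[str, float]:
    """Sum the viewing time per target from time-ordered gaze samples.

    The interval between two consecutive samples is attributed to the target
    of the earlier sample. Intervals where nothing was viewed (None) are
    skipped, and the last sample only closes the preceding interval.
    """
    totals: Dict[str, float] = defaultdict(float)
    previous: Optional[GazeSample] = None
    for timestamp, target in samples:
        if previous is not None:
            prev_time, prev_target = previous
            if timestamp < prev_time:
                raise ValueError("samples must be ordered by timestamp")
            if prev_target is not None:
                totals[prev_target] += timestamp - prev_time
        previous = (timestamp, target)
    return dict(totals)


if __name__ == "__main__":
    log = [
        (0.0, "player_10"),    # hypothetical target names
        (1.5, "player_10"),
        (2.0, None),           # user looks away from AR content
        (3.0, "match_stats"),
        (5.0, None),
    ]
    per_target = dwell_times(log)
    print(per_target)
    print("total viewing time:", sum(per_target.values()))
```

Attributing each interval to the earlier sample keeps the computation independent of the sampling rate, which matters if the headset logs at an irregular frame rate.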

Results

The final result is a single combined application that works for both the Home and the Stadium experience. This allowed the team to gather data via one streamlined workflow while minimizing development effort.

Application Home

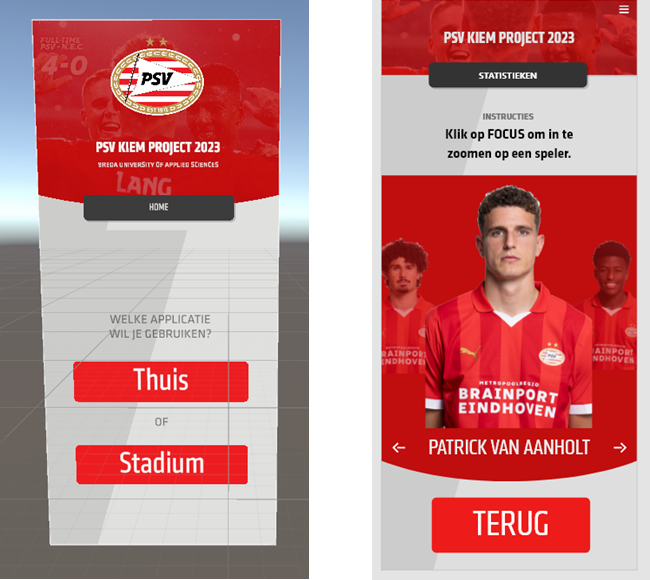

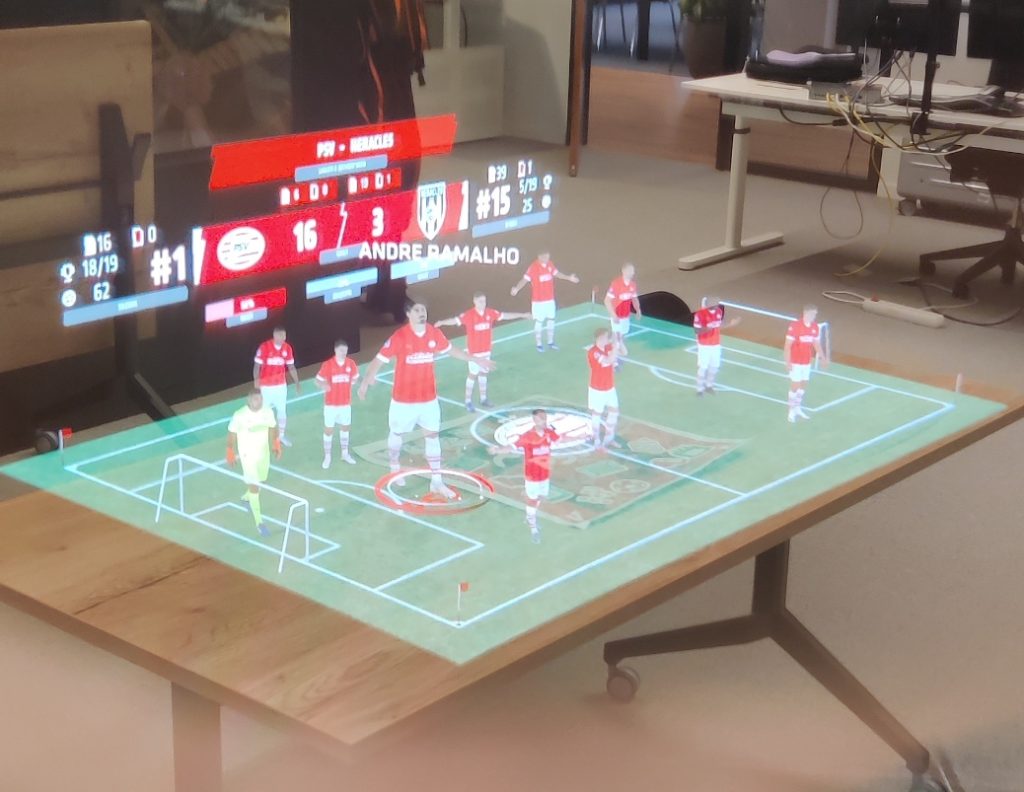

The home application displays an overview of the match and all involved players on top of a table.

A life-size animation of the selected player is placed near this table.

Application Stadium

The stadium application displays similar information, but places it in the center of the field.

The players are scaled up to roughly 10 times life size.

Media

Technical Details

Hardware

Xreal Light as the AR HMD: a lightweight, easy-to-use display for spatial experiences. This device tracks both position and rotation, so that a digital object retains its location inside a physical space.

Software

Research Output

The project is currently in its writing and publication phase. Further information on the research output will be provided at a later point.