Introduction

Augmented Reality (AR) covers a vast variety of content, which can be displayed on a phone, a monitor, or a head-mounted display (HMD). For this gem we will focus on the latter, and on the available options for consistently placing content within a physical space.

Traditional AR glasses or viewers provided plain text and image-based content to the user, sometimes showing a video or a mirror of a PC screen. This type of AR content is well known from the original Google Glass, and is currently used by many devices, such as the Xreal Air, which serves as a large-screen display on the go.

These glasses are meant to take over the role of a traditional screen, showing information in a more integrated manner, or at a scale that is not easily possible on the go. This content does not need to be placed in the real world; instead, it should stay within the user's space, often directly in front of the user's eyes no matter where they look.

While this content is perfectly suitable for day-to-day activities, embedded AR experiences rely on content that is placed at a fixed location in the real world. This is seen in applications such as Pokémon GO, where you can walk around the Pokémon you are trying to catch, but also in indoor experiences such as Cradle’s own AR-enriched dance experience. In these situations, we want the content to remain at its original location in the physical world, even as you walk around it.

Placing Content

Placing content in a physical environment is typically done using a method called SLAM (Simultaneous Localization And Mapping). This method uses one or more cameras to build a 3D representation of your surroundings while you are using the AR device, and at the same time uses the current camera view to determine where you are within this mapped environment. While this method is great for single-user experiences, as it can place content in almost any location, each device builds its own map with its own origin, so it needs additional information to place the same object in the same location for multiple users. Multi-user experiences such as the AR-enriched dance experience can therefore use (amongst others) one of the following methods to align content for all users:

- GPS: Using the latitude and longitude to place objects inside the real world

- Manual Placement: Asking the user to manually place the content with a controller or hand gesture

- VPS (Visual Positioning System): Creating a 3D scan of the environment, and using this scan to place content in a predetermined location

- Image and Object Tracking: Asking the user to scan a code, image, or physical object and placing content at the same physical location

GPS

GPS uses satellites to measure the position of a GPS device, such as a smartphone or satnav, almost anywhere on Earth (GPS.gov). On a smartphone, this typically determines the user's position to within about 4.9 meters under open sky. Knowing a user’s approximate position in the world helps the developer place content in a roughly estimated position, and is often used in GPS-based AR games, where an event is triggered when the user is near a location in the physical world. Instagram and Snapchat demonstrate this well, using GPS data to show filters for a specific company or event at that physical location. While the technology is great for roughly estimating where the user is or where content should be placed, it cannot place the same content in exactly the same physical location for all users. GPS accuracy is reduced even further indoors, where buildings block and reflect satellite signals, making it unusable for our stage-based AR performance.
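The proximity trigger used by GPS-based games can be sketched as a simple geofence check. The Python below is a minimal illustration under our own assumptions, not code from any of the apps mentioned: positions are latitude/longitude in degrees, distance uses the haversine formula with a mean Earth radius, and the function names and the choice to widen the trigger radius by the reported accuracy are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_near(user_lat: float, user_lon: float,
            poi_lat: float, poi_lon: float,
            trigger_radius_m: float, accuracy_m: float = 4.9) -> bool:
    """Hypothetical geofence: True if the point of interest may be within the trigger radius.

    The GPS fix can be off by roughly `accuracy_m`, so the radius is widened by
    that amount to avoid missing users who are actually inside the zone.
    """
    distance = haversine_m(user_lat, user_lon, poi_lat, poi_lon)
    return distance <= trigger_radius_m + accuracy_m
```

Padding the radius keeps the trigger forgiving, but it also illustrates the limitation above: an uncertainty of several meters is fine for deciding that a user is "near" a location, and far too coarse for aligning content with a stage.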

Manual Placement

Another option is to let users determine the location of the content themselves, by manually setting its starting position and rotation. This is often used in single-user experiences such as marketing apps, where 3D models of furniture can be placed in your own living room. This method allows a lot of freedom, and can be used in nearly any situation as long as the SLAM tracking described earlier works. While the method is easy to use, it lacks accuracy, as it relies on each user placing the content in exactly the right location. This lack of accuracy is usually acceptable for individual experiences. However, multi-user experiences, especially those where physical and digital content is mixed (such as dancers on a stage), require content to be placed in exactly the same location for all users. We therefore decided that this method was also not suitable for the AR-enriched dance experience.
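A common way to implement manual placement is to cast a ray from the controller (or hand) and place the content where that ray hits the floor, with the user choosing the rotation. The sketch below assumes a hypothetical, already-detected horizontal floor at a known height in y-up session coordinates; a real application would instead raycast against the planes detected by its AR framework. `Placement`, `ray_hits_floor` and `place_content` are illustrative names, not an existing API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in y-up session coordinates


@dataclass
class Placement:
    position: Vec3   # where the content's origin is placed
    yaw_deg: float   # rotation around the vertical axis chosen by the user


def ray_hits_floor(origin: Vec3, direction: Vec3, floor_y: float) -> Optional[Vec3]:
    """Intersect a pointing ray with the horizontal plane y = floor_y.

    `direction` does not need to be normalized; returns None if the ray is
    parallel to the floor or the floor lies behind the controller.
    """
    dy = direction[1]
    if abs(dy) < 1e-9:
        return None
    t = (floor_y - origin[1]) / dy
    if t <= 0:
        return None
    return (origin[0] + t * direction[0], floor_y, origin[2] + t * direction[2])


def place_content(controller_pos: Vec3, controller_dir: Vec3,
                  floor_y: float, user_yaw_deg: float) -> Optional[Placement]:
    """Hypothetical manual placement: position from the ray hit, rotation from the user."""
    hit = ray_hits_floor(controller_pos, controller_dir, floor_y)
    if hit is None:
        return None
    return Placement(position=hit, yaw_deg=user_yaw_deg % 360.0)
```

The resulting position is expressed in each device's own session coordinates, so two users who point at "the same" spot will still end up with slightly different placements, which is exactly the accuracy problem described above.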

VPS

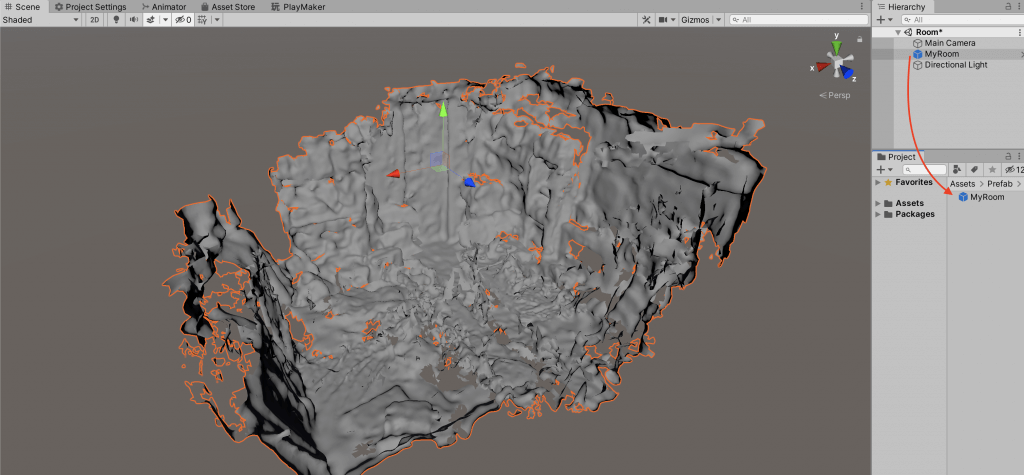

After eliminating both GPS and Manual Placement, we looked into VPS, a method that uses pre-scanned environments to identify the user’s exact location. Various companies (including Immersal, Niantic, and Google) offer solutions to scan your own environment or use pre-existing scans. This 3D scan can be loaded into software such as Unity to determine exactly where the digital content should be placed. The application stores the scan, together with the desired location of the digital content, and uses it to align the digital content with the physical location. This method is highly accurate as long as the VPS system can recognize the scanned environment, and it is used in shared spaces such as cinemas and public squares.
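The alignment step described above can be sketched as a chain of rigid transforms. This is not the Immersal, Niantic, or Google API; it assumes the VPS reports the device pose in the scan's (map) coordinate frame at the same moment the device's own SLAM tracking reports its pose in the session frame, and that both frames are gravity-aligned (y up), so only a yaw rotation separates them. `Pose`, `align_map_to_session` and `content_in_session` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose:
    """Gravity-aligned rigid transform: translation (x, y up, z) plus yaw about +y, in radians."""
    x: float
    y: float
    z: float
    yaw: float

    def rotate(self, x: float, y: float, z: float) -> Tuple[float, float, float]:
        """Rotate a vector about the vertical axis by this pose's yaw."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (c * x + s * z, y, -s * x + c * z)

    def compose(self, other: "Pose") -> "Pose":
        """Chain transforms: apply `other` first, then `self`."""
        rx, ry, rz = self.rotate(other.x, other.y, other.z)
        return Pose(self.x + rx, self.y + ry, self.z + rz, self.yaw + other.yaw)

    def inverse(self) -> "Pose":
        """The transform that undoes this one."""
        undo_rotation = Pose(0.0, 0.0, 0.0, -self.yaw)
        rx, ry, rz = undo_rotation.rotate(self.x, self.y, self.z)
        return Pose(-rx, -ry, -rz, -self.yaw)


def align_map_to_session(session_from_device: Pose, map_from_device: Pose) -> Pose:
    """session_from_map = session_from_device * inverse(map_from_device)."""
    return session_from_device.compose(map_from_device.inverse())


def content_in_session(session_from_map: Pose, map_from_content: Pose) -> Pose:
    """Place content authored in the scan's frame into this device's session frame."""
    return session_from_map.compose(map_from_content)
```

Once `session_from_map` is known, every piece of content authored against the scan can be placed with a single composition, which is what makes the placement reproducible for every user who localizes against the same scan.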

Our project had a fixed location and devices capable of running a VPS, a seemingly winning combination. However, we soon discovered a problem when scanning the exact location we were going to use. Pop music venues are often large black halls with very few discernible shapes or colors, to focus attention on the stage and the artist performing on it. A VPS scan is similar to a photogrammetry scan and requires enough distinct visual features to create a 3D model, something not present in such a hall, and something we could not change. Unfortunately, this meant that VPS was not suitable either.

Image and Object Tracking

After eliminating all of the previous options, we decided to try image tracking, a simple and well-established solution available on nearly all AR systems. Image tracking systems allow a developer to create a library of reference images, after which the AR system checks the camera feed to determine whether one of these images is visible to the AR device. The system then reports the position and orientation of the detected image in the physical world. We placed a physical cutout of the image at a fixed location in front of the stage, and asked the participants to look at it while wearing the AR headset. As long as the image was placed in the same spot during every performance, we could then determine where the stage was.
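Deriving the stage from the tracked image comes down to one transform: the image's pose, as reported by the tracking system, chained with a fixed offset from the image to the stage that is measured once in the venue. The sketch assumes the cutout stands upright, so only its position on the floor plane and its heading (yaw) are kept. `FloorPose`, `stage_from_marker`, `stage_corners`, and the convention that the stage's origin is the center of its front edge with its depth along the local -z axis, are hypothetical choices for illustration.

```python
import math
from typing import List, Tuple

# Pose on the floor plane of the session: (x, z, yaw in radians), y-up coordinates.
FloorPose = Tuple[float, float, float]


def apply(pose: FloorPose, local_x: float, local_z: float) -> Tuple[float, float]:
    """Transform a point from the pose's local frame into session coordinates."""
    x, z, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * local_x + s * local_z, z - s * local_x + c * local_z)


def stage_from_marker(marker: FloorPose, offset: FloorPose) -> FloorPose:
    """Chain the tracked image pose with the measured image-to-stage offset."""
    sx, sz = apply(marker, offset[0], offset[1])
    return (sx, sz, marker[2] + offset[2])


def stage_corners(stage: FloorPose, width: float, depth: float) -> List[Tuple[float, float]]:
    """Corners of a rectangular stage whose origin is the center of its front edge."""
    half = width / 2
    local = [(-half, 0.0), (half, 0.0), (half, -depth), (-half, -depth)]
    return [apply(stage, lx, lz) for lx, lz in local]
```

Because the offset is measured once and the image is put back in the same spot for every performance, every headset that sees the image computes the same stage position, regardless of where its own session started.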

While this solution was not the most glamorous, it struck the fine balance between usability and accuracy that we needed for this project. Most of our participants were entirely new to AR HMDs, yet were able to scan the image without any help. The method was also accurate enough to consistently place the digital content on top of the stage.

The future of localization

All of the described methods have been, and still are, actively used in AR development. New hardware, including the Meta Quest 3, the Apple Vision Pro, and upcoming AR glasses, shows how the industry is actively innovating and searching for ways to improve both single-user and shared experiences. The same is visible in localization methods, where new options are invented, combined, and updated to improve both usability and accuracy when placing content inside a shared physical environment.

GPS and VPS are already being combined by companies such as Niantic and Google to create global applications with minimal performance impact, by checking only the VPS scans near the user’s position. Research into shared SLAM systems creates opportunities for users to join and leave sessions in which content is placed relative to the same reference environment, and similar ideas are used in the Meta Quest, which lets users share details about their physical space using Shared Anchors. The industry is clearly not done defining how content can be shared amongst multiple users, making it a dynamic field that constantly strives to create the best product for any situation.
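Combining GPS and VPS can be illustrated with a simple shortlist: the coarse GPS fix selects which pre-scanned maps are worth trying, so the expensive visual matching only runs against scans near the user. This is not how Niantic or Google implement it, since their internals are not described here; `VpsMap`, the 100 m search radius, and the equirectangular distance approximation are assumptions made for this sketch.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class VpsMap:
    map_id: str  # hypothetical identifier of a pre-scanned location
    lat: float   # approximate latitude of the scan, in degrees
    lon: float   # approximate longitude of the scan, in degrees


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Equirectangular approximation; adequate for distances of a few kilometers."""
    r = 6_371_000.0
    x = math.radians(lon2 - lon1) * math.cos(math.radians((lat1 + lat2) / 2))
    y = math.radians(lat2 - lat1)
    return r * math.hypot(x, y)


def candidate_maps(maps: List[VpsMap], user_lat: float, user_lon: float,
                   radius_m: float = 100.0, accuracy_m: float = 4.9) -> List[VpsMap]:
    """Return only maps near the GPS fix, closest first, for the visual localizer to try."""
    limit = radius_m + accuracy_m
    nearby = [(distance_m(user_lat, user_lon, m.lat, m.lon), m) for m in maps]
    return [m for d, m in sorted(nearby, key=lambda pair: pair[0]) if d <= limit]
```

Trying the closest scans first means the visual matching usually succeeds on the first attempt when the user really is at a scanned location.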

Conclusion

Current advancements in AR technology bring an increasing need for spatially persistent 3D content in multi-user experiences. Various methods, including GPS, Manual Placement, Visual Positioning Systems (VPS), and Image and Object Tracking, were examined for our project, the AR Enriched Dance Performance. Iterating over these methods, we found that GPS and Manual Placement were not accurate enough for the experience we wanted to offer. While VPS was highly promising, the lack of distinct visual features in the venue forced us to leave this technology for a future project. Image tracking therefore emerged as the most practical solution for consistent content alignment, balancing usability and accuracy. Overall, the chosen solution proved pragmatic, aligning with the project requirements and user context. We do, however, see major advancements within the field of shared spaces, and are highly enthusiastic about the possibilities we will encounter in our next projects.