Virtual Humans for Emotional Social Prosperity in the European Region (VHESPER) explores how Virtual Humans (VHs), highly realistic and interactive digital agents, can become an emerging technology with real societal impact. The project builds and connects European research networks to investigate empathic interactions between real and virtual humans and to define a multidisciplinary R&D agenda. VHESPER aims to strengthen Europe’s knowledge base and prepare collaborative frameworks for future research on Virtual Humans.

Story

VHESPER began with an unusual question that reshaped how BUas approaches virtual human development: how do you study crying when reliable, authentic visual stimuli scarcely exist? When Ad Vingerhoets, emeritus professor and expert in the psychology of tears, approached the Cradle research team, he pointed to a critical gap in emotion research. Genuine crying is difficult to capture on camera, so researchers worldwide lack reliable stimulus material for studying profound human emotions.

VHESPER addresses this challenge by exploring how virtual humans can express subtle, deeply human emotional cues, from micro-expressions to flowing tears. Through motion-capture sessions, emotional acting studies, and FACS-based modelling, the team builds expressive virtual humans that go beyond basic, cartoon-like emotions.
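FACS (the Facial Action Coding System) describes facial expressions as combinations of numbered Action Units (AUs). The sketch below shows, in a hedged and simplified way, how FACS-based modelling can drive a face rig: each emotion maps to a commonly cited prototype AU combination, and each AU becomes a blendshape weight. The rig naming (`AU<n>` blendshapes), the uniform intensity, and the helper `blendshape_weights` are hypothetical; the AU sets are widely cited textbook prototypes, not VHESPER's own stimulus coding.

```python
from typing import Dict, Tuple

# Commonly cited prototype AU combinations for basic emotions.
# Illustrative defaults only; NOT the VHESPER FACS dataset.
EMOTION_PROTOTYPES: Dict[str, Tuple[int, ...]] = {
    "neutral": (),
    "happiness": (6, 12),              # cheek raiser, lip corner puller
    "sadness": (1, 4, 15),             # inner brow raiser, brow lowerer, lip corner depressor
    "surprise": (1, 2, 5, 26),
    "fear": (1, 2, 4, 5, 7, 20, 26),
    "anger": (4, 5, 7, 23),
    "disgust": (9, 15, 16),
}


def blendshape_weights(emotion: str, intensity: float = 1.0) -> Dict[str, float]:
    """Map an emotion to weights for hypothetical blendshapes named 'AU<n>'.

    The intensity is clamped to [0, 1] and applied uniformly to every AU
    in the prototype (a real rig would tune each AU separately).
    """
    if emotion not in EMOTION_PROTOTYPES:
        raise ValueError(f"unknown emotion: {emotion!r}")
    level = min(max(intensity, 0.0), 1.0)
    return {f"AU{au}": level for au in EMOTION_PROTOTYPES[emotion]}


print(blendshape_weights("sadness", 0.8))
# {'AU1': 0.8, 'AU4': 0.8, 'AU15': 0.8}
```

A per-AU weight table keeps the emotion definitions separate from the rig, so the same prototypes could drive different virtual humans.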

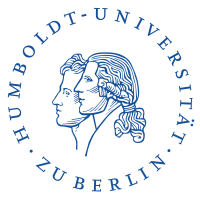

One milestone is a sophisticated physics-based virtual tears system that simulates tear-film overflow, gravity-driven movement, and even subtle details such as the wet trail a tear leaves behind. The system has been used in experimental studies of how people perceive and respond to six different virtual humans displaying various emotions.
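The internals of the VHESPER tears system are not described here. As a minimal, hypothetical sketch of the three effects named above, the toy model below assumes a one-dimensional vertical cheek, a fixed tear-film overflow threshold, and a linear drag term standing in for skin friction and adhesion. The class `TearSim` and all of its parameters are illustrative assumptions, not the project's implementation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TearSim:
    """Toy 1D tear model (hypothetical; not the VHESPER implementation).

    y is the distance (m) a droplet has travelled down the cheek
    from the lower eyelid.
    """
    inflow_rate: float = 1.0       # tear-film volume produced per second (arbitrary units)
    overflow_volume: float = 0.5   # film volume at which the eyelid margin overflows
    gravity: float = 9.81          # m/s^2, assumes a vertical cheek
    drag: float = 200.0            # 1/s, lumps skin friction/adhesion into linear drag
    cheek_length: float = 0.08     # m, droplets past this point drip off and are removed
    dry_rate: float = 0.2          # wetness lost per second by each trail sample
    film: float = 0.0
    droplets: List[List[float]] = field(default_factory=list)       # [y, velocity]
    trail: List[Tuple[float, float]] = field(default_factory=list)  # (y, wetness in (0, 1])

    def step(self, dt: float) -> None:
        # 1. The existing wet trail dries; fully dry samples are dropped.
        self.trail = [(y, w - self.dry_rate * dt) for y, w in self.trail
                      if w - self.dry_rate * dt > 0.0]
        # 2. Tear film accumulates; an overflow releases a droplet at the eyelid.
        self.film += self.inflow_rate * dt
        if self.film >= self.overflow_volume:
            self.film -= self.overflow_volume
            self.droplets.append([0.0, 0.0])
        # 3. Gravity-driven sliding. Drag is integrated implicitly, so the
        #    velocity never exceeds the terminal value gravity / drag.
        for d in self.droplets:
            d[1] = (d[1] + self.gravity * dt) / (1.0 + self.drag * dt)
            d[0] += d[1] * dt
            self.trail.append((d[0], 1.0))  # the droplet wets the skin it passes
        self.droplets = [d for d in self.droplets if d[0] < self.cheek_length]


sim = TearSim()
for _ in range(300):  # 3 simulated seconds at dt = 0.01 s
    sim.step(0.01)
print(len(sim.droplets), len(sim.trail))
```

Integrating the drag term implicitly keeps the sketch stable even when `drag * dt` is large, which matters because a strong drag is what makes droplets creep slowly, as real tears do, rather than fall freely.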

This work is part of a broader European collaboration uniting Cradle BUas, Humboldt University of Berlin (DE), the University of Bremen (DE), and Howest University of Applied Sciences (BE), blending hyperrealistic virtual human technology with cognitive science and psychological research. VHESPER is defined by this combination of scientific depth, societal relevance, and European collaboration.

Results

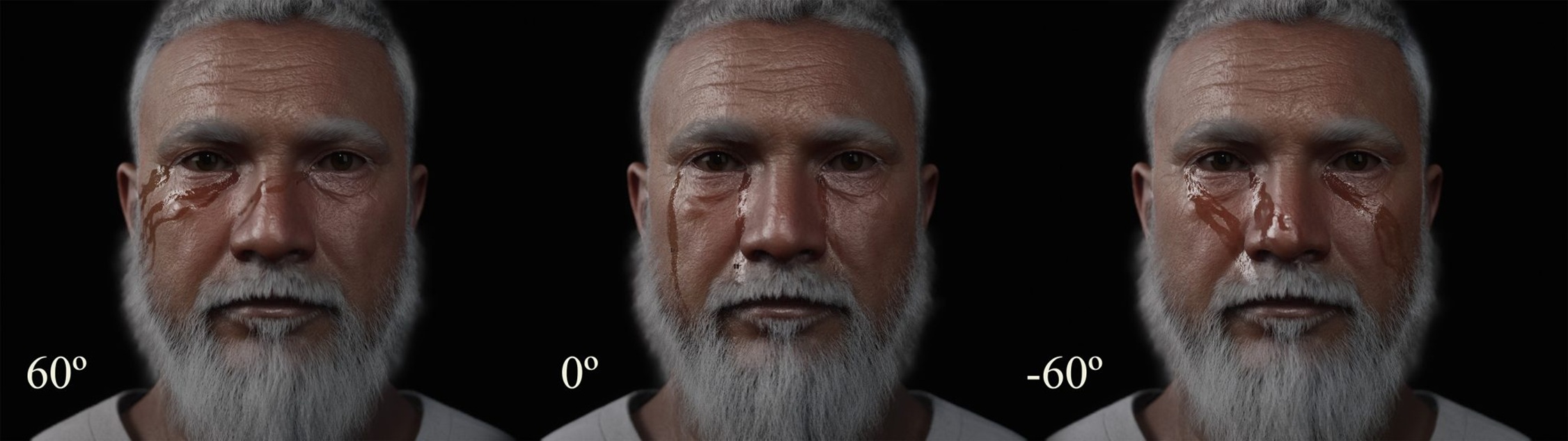

VHESPER developed a study design, replicating the work of Gračanin et al. (2023), to compare static and dynamic stimuli of expressive VHs. An initial experiment with 57 participants led to the development of a new FACS-based stimulus set, which is currently being tested with approximately 160 participants. Preliminary results have been published (van Apeldoorn et al., 2025).

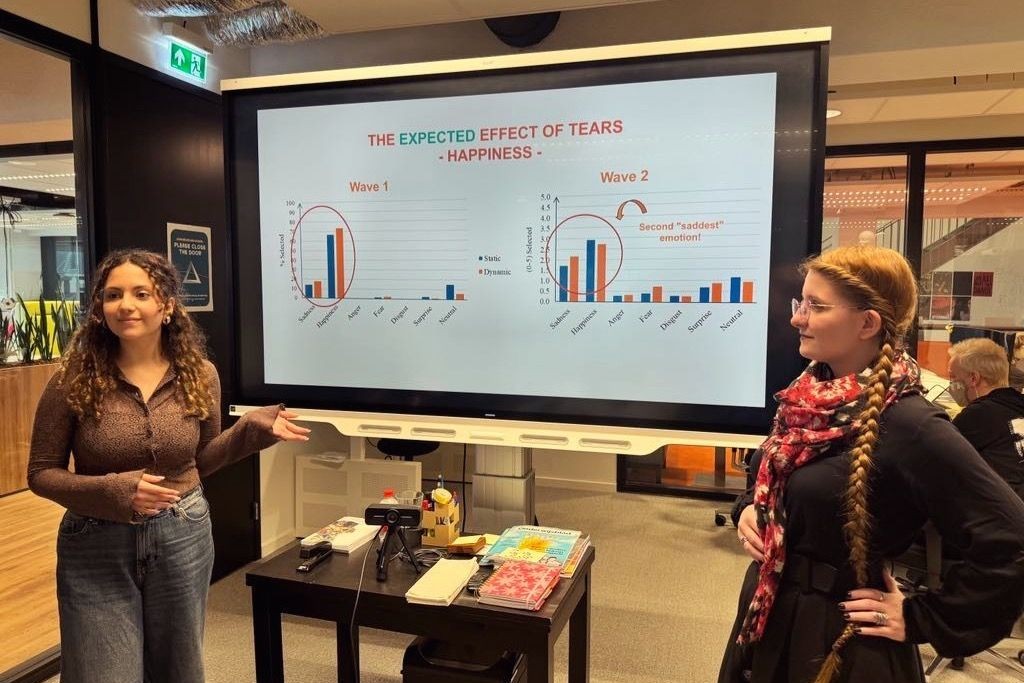

A distinctive feature of VHESPER is its focus on student-led innovation. Seven master’s students, several graduating with high distinction, contributed to experiment development, data analysis, and prototyping of expressive systems. Partners played a central role in designing experiments, guiding methodology, and safeguarding scientific standards. They also evaluated each iteration of the VH stimuli, contributed to the FACS dataset, and improved the analysis pipeline, including the mimicry analysis.

Research Output

The first experiment tested whether real humans (RHs) can attribute profound emotions to virtual humans (VHs). In a behavioural study (N = 56), participants viewed four VHs (two male, two female) displaying seven emotional states (neutral, happiness, sadness, anger, fear, disgust, surprise), presented for 6 seconds as either static photos or videos; all avatars showed tears. The study compared dynamic and static virtual tears to examine emotion recognition, emotional contagion, mimicry, and perceived intensity.

Key findings:

- Dynamic tears made VHs appear significantly sadder than static tears (p < .001; +0.49 on a 0–5 scale), except for the sadness expression (ceiling effect) and the surprise expression (often interpreted as happiness).

- Significant differences in perceived intensity were found for the sadness (M = 4.11) and neutral (M = 2.96) expressions.

- Authenticity ratings did not differ (p = .591).

- Emotional contagion varied: happiness was most contagious, anger least, supporting previous findings that positive emotions spread more easily.

Ongoing research:

- Delphi Study on Emerging Trends and Implications of Human–Virtual Human Interaction

- FACS-based expressive agents