Story

Alcohol abuse is a worldwide problem that is being addressed from various angles; in the Netherlands alone, over 950,000 people regularly drink to excess. To assist in rehabilitation, virtual reality applications are used for exposure therapy in safe environments, where the temptation can be triggered without any actual alcohol being involved. The ARET project explores a first step in this form of therapy using Augmented Reality technology.

Process

Our aim was to develop a prototype application that could support the following goals:

- Get different digital characters to offer a drink to the user

- Get the user to ask for, as well as accept, the drink being offered

- Allow the therapist to set up the app in as little time as possible

- Use AR glasses that superimpose the digital content on the real world, so that both are visible simultaneously

The development team also used this project to explore various interaction methods and spatial positioning methods, with the aim of creating an application that is easy to use, requires little to no setup, and asks for active participation from the user.

Creating the characters that offer the drink was the first challenge we tackled. Previous projects for VRET already required cartoon-style characters, which we could re-use for ARET. The realistic characters were developed with the help of our partners at 4DR Studios in Eindhoven. These 4D video files (volcaps) were enhanced with tracking points at the hand and head positions of the characters. At this point the animated cartoon characters, as well as the volcap files, were ready to be placed in Unity, where we could show the required model and place a drink in its hand using the added tracking points.

Previous VRET interactions focused on point & click actions, where the user aimed a controller at a 2D interface to select an option. For ARET we wished to explore more immersive interactions, which would also force the user to participate actively when choosing a drink. We therefore separated the interaction into a selection action and an acceptance action. For the acceptance we immediately went for a hand-tracking solution, asking the user to touch or grab the drink of their choice. Selection was slightly more problematic. Initially we considered physical menus, a digital menu with a pointer, and swiping actions to cycle through a range of possible options. Limitations in the hardware and in the availability of accessible SDKs brought us to voice recognition in the end. We used the native Android voice library for continuous voice recognition, checking the recognized speech against a dictionary of the available drinks.
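The dictionary check described above can be sketched as follows. This is a minimal, engine-agnostic illustration in Python rather than the project's Unity code; the drink names, the spoken variants, and the function `match_drink` are hypothetical and only show one way a recognized transcript could be compared against the available choices.

```python
import re

# Hypothetical dictionary: canonical drink -> phrases the user might say.
DRINKS = {
    "beer": ("beer", "lager", "pint"),
    "wine": ("wine", "red wine", "white wine"),
    "water": ("water", "sparkling water"),
}

def match_drink(transcript, drinks=DRINKS):
    """Return the canonical drink mentioned in the transcript, or None."""
    # Normalize: lowercase, keep only letter runs, join with single spaces.
    words = " ".join(re.findall(r"[a-z]+", transcript.lower()))
    padded = f" {words} "  # padding gives whole-word matching
    best_name, best_len = None, 0
    for name, variants in drinks.items():
        for variant in variants:
            # Prefer the longest matching phrase ("red wine" over "wine").
            if f" {variant} " in padded and len(variant) > best_len:
                best_name, best_len = name, len(variant)
    return best_name
```

With continuous recognition, a function like this would be called on each new transcript, and the selection would only change when it returns something other than `None`.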

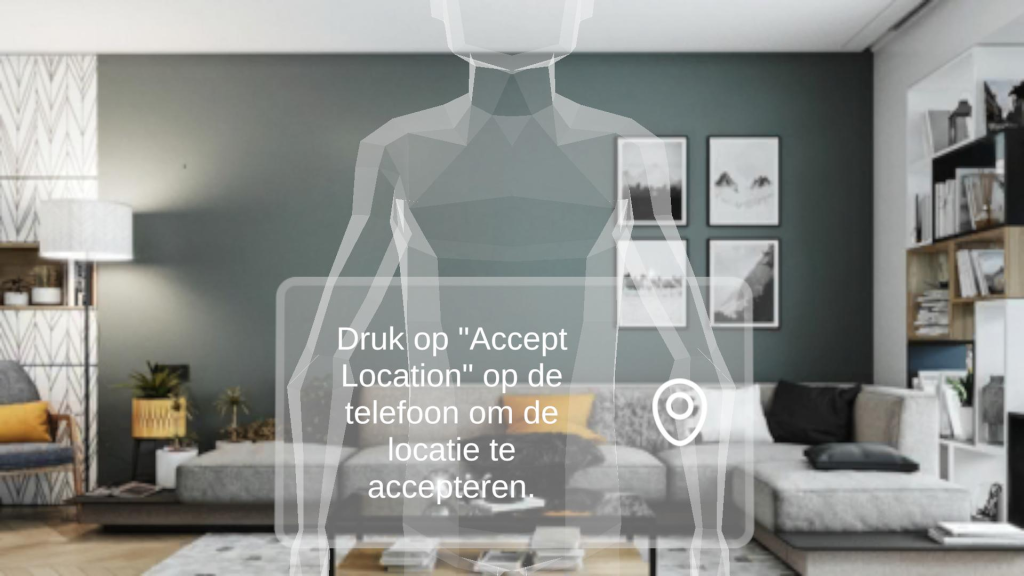

Spatial positioning is a topic that comes up again with every project. Previous applications explored fixed anchors, scannable images, and manual placement using the location of the glasses. One of the requirements of the ARET project was that the glasses could be taken anywhere and could place the character in any location. To support this we resorted to the Spatial Meshing option of the glasses, which creates a 3D model of the physical surroundings. A ray cast then decides whether the space in front of the user is free or obstructed. If the position is free, the user wearing the glasses sees a faint character standing at a predetermined distance, and a button on the phone can be pressed to accept the location. This interaction was extremely simple to explain, and it allows spatial positioning in nearly any situation.
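As a rough illustration of the free-space check, the sketch below casts a ray from the user's head against a list of mesh triangles and returns a preview position only when nothing is hit within the placement distance. It is written in plain Python for readability and is not the project's Unity implementation; the function names, the triangle-list representation of the spatial mesh, and the use of the Möller-Trumbore intersection test are all assumptions made for this example.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_hits_triangle(origin, direction, tri, max_dist, eps=1e-9):
    """Moller-Trumbore test; `direction` must be a unit vector."""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:          # ray is parallel to the triangle plane
        return False
    inv = 1.0 / det
    t_vec = _sub(origin, v0)
    u = _dot(t_vec, p) * inv
    if u < 0.0 or u > 1.0:
        return False
    q = _cross(t_vec, e1)
    v = _dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return False
    t = _dot(e2, q) * inv       # distance along the ray to the hit point
    return eps < t <= max_dist

def preview_position(head_pos, forward, mesh_triangles, distance_m=2.5):
    """Return the character position if the path is free, else None."""
    length = math.sqrt(_dot(forward, forward))
    d = (forward[0] / length, forward[1] / length, forward[2] / length)
    for tri in mesh_triangles:
        if ray_hits_triangle(head_pos, d, tri, distance_m):
            return None         # obstructed: show no preview
    return (head_pos[0] + d[0] * distance_m,
            head_pos[1] + d[1] * distance_m,
            head_pos[2] + d[2] * distance_m)
```

A single ray only tests one line of sight; a fuller check might cast several rays or sweep a volume the size of the character, which this sketch leaves out.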

The interface was the final challenge we tackled when developing the ARET application. The application required 2 types of interfaces:

The Phone Interface became a simple multi-select in which the therapist chooses the character as well as the consumption offered. The therapist can also set the distance of the character, where 250 cm was chosen as the default because it provided the best view. The remaining interface consists of 3 buttons: one to start the session, one to accept the location of the previewed character, and one to go back to the main menu after the session is over.
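The flow behind these three buttons can be pictured as a small state machine. The sketch below is a hypothetical Python model, not the app's actual code: the phase names, the `SessionConfig` fields, and the example character and drink values are assumptions; only the 250 cm default and the three button actions come from the description above.

```python
from dataclasses import dataclass
from enum import Enum, auto

class Phase(Enum):
    MENU = auto()      # therapist is choosing character and consumption
    PLACING = auto()   # user sees the faint preview character
    RUNNING = auto()   # character offers the drink

@dataclass
class SessionConfig:
    character: str            # e.g. a cartoon or volcap character (hypothetical id)
    consumption: str          # the drink being offered
    distance_cm: int = 250    # default distance named in the text

class Session:
    def __init__(self, config):
        self.config = config
        self.phase = Phase.MENU

    def start(self):
        """'Start session' button: begin placing the character."""
        if self.phase is Phase.MENU:
            self.phase = Phase.PLACING

    def accept_location(self, position_free):
        """'Accept location' button: only valid when the preview is shown."""
        if self.phase is Phase.PLACING and position_free:
            self.phase = Phase.RUNNING

    def back_to_menu(self):
        """'Back to main menu' button, used once the session is over."""
        if self.phase is Phase.RUNNING:
            self.phase = Phase.MENU
```

Guarding each transition on the current phase keeps a stray button press, such as accepting a location before the session has started, from having any effect.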

The AR Interface was necessary at 3 moments: to tell the user how to place the character, to show the available options and explain to the user how to select them, and to thank the user for their participation. The basic design of these interface windows is a simple grey box with a grow/shrink animation when it is placed or removed. The box has a simple grey outline and was designed to match the aesthetic of the new Apple Vision interface. The combination of text and icons creates a simple yet understandable interface for all users.

Results

Project ongoing

Technical Details

Device: Xreal Light, SDK 2.1.0

Engine: Unity3D 2022.3.7f1

Voice Recognition: EricBatlle/UnityAndroidSpeechRecognizer

VolCap Plugin: 4Dviews – Volumetric video capture technology

Characters: Volumetric Capture, Low Poly Cartoon

Research Output

Research Ongoing