Why we needed to move to a self-hosted infrastructure

When working on a large project that involves many developers, it is important to be able to track the changes introduced in every new build.

Generating a new build locally for each change can waste a lot of development time, especially when builds take a long time.

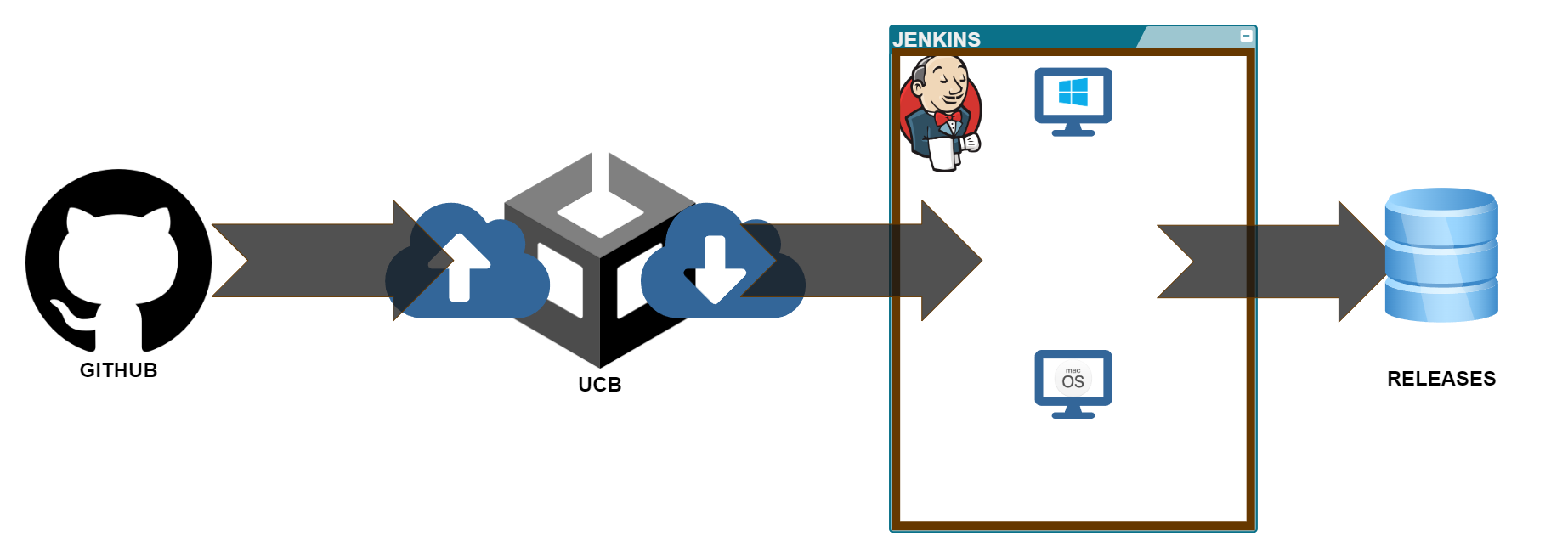

For this reason, in the past year, we moved all of our Unity projects to Unity Cloud Build (UCB), Unity's build and storage service, which we were able to integrate into our CI/CD cycle thanks to the well-documented API that the service exposes.

The honeymoon was short-lived, though: Unity recently decided to remove UCB from all subscriptions and treat it as a completely separate, pay-as-you-go service.

As the R&D team of a University of Applied Sciences, we don’t have the financial backing of a company. While we could have kept using the service, we would have had to cut our usage drastically, to the bare minimum, to keep costs contained.

For this reason, we decided to extend our internal infrastructure to slowly decommission the services provided by UCB and replicate them internally.

What we had to implement

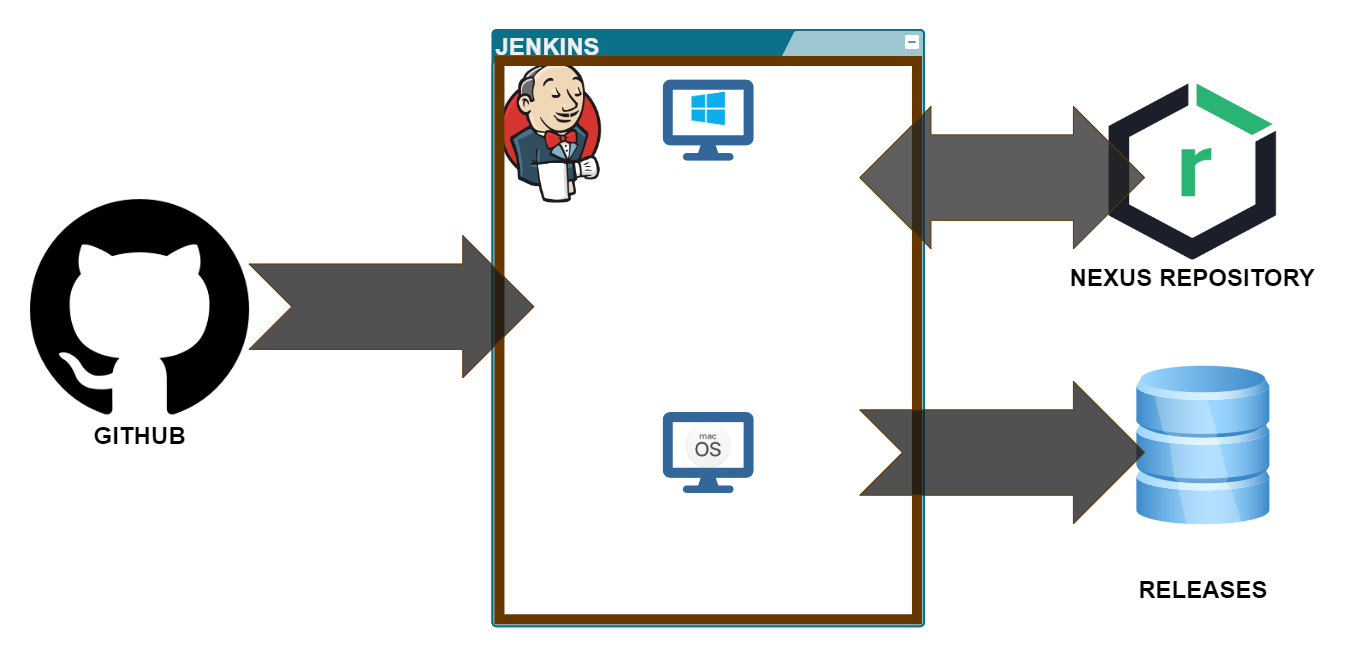

Well, luckily, we already had a Jenkins infrastructure in place that we were previously using with UCB to retrieve the builds and prepare them for release.

This infrastructure already included a Windows node and a macOS node.

If you’ve never heard of it, Jenkins is an open-source automation server mainly used to automate building, testing, and deploying software. It can distribute work across different machines (nodes) connected to its central installation.

We then decided to create new jobs that trigger a build whenever a change is detected in the codebase on GitHub.

A Jenkins job can be defined in a Jenkinsfile that must be present in the code repository. The Jenkinsfile is written in Groovy, and it allowed us to define different behaviors depending on the branch the job runs from.

For example, any new merge into the dev branch triggers a development build, while a merge into the main branch generates a normal build for user consumption.
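The branch rule above can be sketched in a few lines. This is only an illustration in Python, not our actual Jenkinsfile (which is written in Groovy); the branch names come from the text, while the build-type labels and the function name are placeholders.

```python
from typing import Optional

# Branch names are taken from the article; the build-type labels
# ("development", "release") are hypothetical placeholders.
BUILD_TYPE_BY_BRANCH = {
    "dev": "development",
    "main": "release",
}


def build_type_for(branch: str) -> Optional[str]:
    """Return the build type for a branch, or None if no build should run."""
    return BUILD_TYPE_BY_BRANCH.get(branch)
```

Keeping the rule in a lookup table means a new branch only needs one new entry, and any branch that is not listed simply triggers nothing.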

Launching the build from the CLI was fairly easy, since Unity supports this and documents it. With this in place we could generate the builds; only the question of where to store them remained.

Since storing build artifacts is a problem the whole IT industry faces, we didn’t have to invent anything.
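As a rough sketch of what such a CLI launch can look like, the helper below assembles a Unity command line from Python. The flags are standard Unity Editor command-line arguments; the editor path, the project path, and the `BuildScript.PerformBuild` method name in the usage note are assumptions for illustration, not details of our setup.

```python
from typing import List


def unity_build_command(
    unity_path: str,
    project_path: str,
    build_target: str,
    execute_method: str,
    log_file: str = "build.log",
) -> List[str]:
    """Assemble the argument list for a headless Unity build."""
    return [
        unity_path,
        "-batchmode",                      # run without opening the editor UI
        "-quit",                           # exit once the method has finished
        "-projectPath", project_path,
        "-buildTarget", build_target,      # e.g. "Win64" or "OSXUniversal"
        "-executeMethod", execute_method,  # static C# method that performs the build
        "-logFile", log_file,
    ]
```

A node could then run it with something like `subprocess.run(unity_build_command(unity, ".", "Win64", "BuildScript.PerformBuild"), check=True)`, where the method name is whatever static build method the project defines.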

We needed an Artifact Management System that satisfied our needs: it should cost us nothing and be accessible remotely.

We found what we needed in “Sonatype Nexus Repository Manager”. This service was built to host build artifacts, and its open-source version offers a well-documented set of APIs.

After some development and testing time, we were finally able to have automated jobs triggering builds and uploading them to our repositories on Nexus.
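To give an idea of the upload step, here is a minimal sketch that prepares an HTTP `PUT` request for a build archive. It assumes the builds go into a Nexus "raw" hosted repository, which accepts uploads at `/repository/<repository>/<path>`; the article does not state which repository format we use, and the host name, repository name, and credentials in the usage note are made up.

```python
import base64
from urllib.parse import quote
from urllib.request import Request


def nexus_upload_request(
    base_url: str,
    repository: str,
    remote_path: str,
    payload: bytes,
    username: str,
    password: str,
) -> Request:
    """Prepare (but do not send) a PUT request that uploads one artifact."""
    # Percent-encode the path so spaces and similar characters stay valid.
    url = f"{base_url.rstrip('/')}/repository/{repository}/{quote(remote_path)}"
    request = Request(url, data=payload, method="PUT")
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    return request
```

Passing the result to `urllib.request.urlopen` would perform the upload, for example `urlopen(nexus_upload_request("https://nexus.example.org", "my-game-builds", "dev/build.zip", data, user, password))`.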

We then reworked the jobs that previously interacted with UCB so that they target our self-hosted storage on Nexus instead.

Usability

This whole infrastructure came about because of the needs of one specific project. Given how useful and capable it turned out to be, it would have been a waste to keep it limited to that project. And since we built it ourselves, we understand it fully and can extend it as our needs change, instead of relying on a black box that provides functionality in exchange for money.

The first logical step would be to extend it to the rest of the Unity projects we’re currently working on, but we need to keep something important in mind: Cradle is an R&D team made up of different professional figures (Artists, Designers, Project Managers, Researchers, Producers, and Developers).

This means that, even with extensive documentation, not all of us can set up a project to use the new infrastructure, given its technical complexity. Moreover, the infrastructure would be pointless if a two-week project needed one to two days of setup.

These aspects are often handled by an SRE or DevOps team in large enterprises, but we don’t have that luxury or that many people.

Well, we found out that the solution to this problem created by the technical complexity of the infrastructure lies in the infrastructure itself.

If we were able to automate through Jenkins so much of our development process, why shouldn’t we try to do the same for the setup procedure itself?

And that’s what we did: we created a job that takes the HTTPS address of a GitHub repository as input, creates the blob store and repositories on Nexus, generates the Jenkinsfile for the project, and creates a branch containing it on the project’s GitHub repository, ready to be merged.

This reduces the input required from the user to a minimum and brings the time spent on a project’s CI/CD setup to less than 20 minutes.
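The first thing such a setup job has to do is turn the repository address into names for everything it is about to create. The sketch below shows one way that could look; the naming scheme (`-blobs`, `-dev`, `-release`) and the branch name `ci/jenkins-setup` are invented for the example and are not the names our job uses.

```python
from dataclasses import dataclass
from typing import Tuple
from urllib.parse import urlparse


@dataclass(frozen=True)
class SetupPlan:
    owner: str
    project: str
    blob_store: str
    repositories: Tuple[str, ...]
    setup_branch: str


def plan_setup(repo_url: str) -> SetupPlan:
    """Derive the resources to create from a GitHub HTTPS repository URL."""
    parsed = urlparse(repo_url)
    if parsed.scheme != "https" or parsed.netloc != "github.com":
        raise ValueError(f"expected an https://github.com/... URL, got {repo_url!r}")
    parts = [part for part in parsed.path.split("/") if part]
    if len(parts) != 2:
        raise ValueError(f"expected <owner>/<repository> in {repo_url!r}")
    owner, project = parts
    if project.endswith(".git"):
        project = project[: -len(".git")]
    slug = project.lower()
    # Hypothetical naming scheme: one blob store, one repository per build type.
    return SetupPlan(
        owner=owner,
        project=project,
        blob_store=f"{slug}-blobs",
        repositories=(f"{slug}-dev", f"{slug}-release"),
        setup_branch="ci/jenkins-setup",
    )
```

Deriving every name from the single URL is what keeps the user input down to one field: the rest of the job only needs to consume the resulting plan.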

Maintenance

Our internal infrastructure grew quite rapidly, so we needed an automated self-check that lets us know as early as possible if anything in the infrastructure stops working.

A broken pipeline is the kind of surprise no one wants to discover on release day, so we put in place another Jenkins job that runs automatically every day and executes each step of our pipeline at least once: build, upload to Nexus, download from Nexus, installer creation, and release.
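The core of such a daily check can be sketched as a loop that runs every step and records which ones fail. This is a simplified illustration rather than our Jenkins job; the step names mirror the list above, and the step functions themselves are left to the caller.

```python
from typing import Callable, Dict, List, Tuple

# A step is a name plus a callable that raises an exception on failure.
Step = Tuple[str, Callable[[], None]]


def run_self_check(steps: List[Step]) -> Dict[str, str]:
    """Run every step in order and return {step name: error message} for failures."""
    failures: Dict[str, str] = {}
    for name, action in steps:
        try:
            action()
        except Exception as error:
            # Record the failure and keep going, so one report covers all steps.
            failures[name] = str(error)
    return failures
```

The check deliberately continues after a failure: a daily report that lists every broken step is more useful than one that stops at the first problem. An empty result means the whole pipeline ran cleanly.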

Future Developments

The automated setup process will be of great help for future projects, but it’s still limited to generating Windows and macOS builds. For this reason, we intend to develop it further in the near future so that we can choose which build targets we want.

For example, most of our AR/XR projects use Android builds.

Additionally, this infrastructure is not limited to Unity projects: it can be used in the same way for Unreal projects, and we’ve already started using the remote building and storage services with some of our Unreal Engine projects.

If you found this knowledge gem interesting and you’d like to know more about the technical implementation, you can reach me at: fabrini.j@buas.nl