What is a mocap session?

Motion capture (mocap) has long been a cornerstone of our virtual human development. During a mocap session, we invite a trained actor or actress to our XR stage to record their behaviors, expressions, and movements. Thanks to our in-house Vicon system, we can translate body performance from the real world into a 3D environment. Similarly, for facial capture, we use MetaHuman Animator along with a head-mounted rig, allowing us to integrate the recorded data seamlessly into the MetaHuman ecosystem.

The mocap shoot in progress at the XR Stage, Breda University of Applied Sciences, featuring the actress and the motion capture setup.

The data we glean from these recordings can then be embedded in our virtual humans, making their movements and behaviors authentic and human-like.

What are we recording?

During our most recent mocap session, on the 6th of June 2025, we set ourselves the ambitious goal of recording the facial and bodily expressions of 23 different emotions. These emotions were carefully selected and profiled using guidance from psychological research and the Facial Action Coding System (FACS).

We decided to go beyond the basics: we expanded our selection of emotions from the six basic ones (happiness, sadness, anger, disgust, surprise and fear) to include more nuanced expressions of contempt, awe, pain, triumph and many more.

Capturing the human soul begins with capturing human emotional complexity!

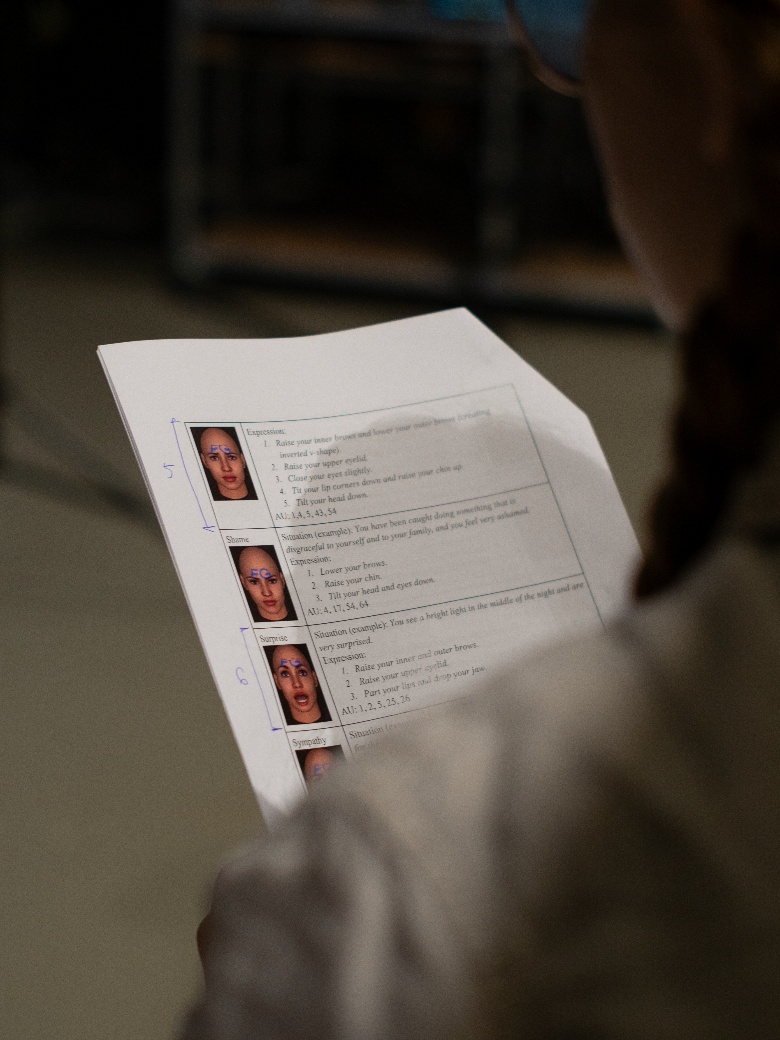

Left: The actress performing an expression of “surprise”, one of the 23 captured emotional expressions. Right: Visual reference of the FACS-based emotional expression guide used.

How will these recordings be used?

The recordings from this mocap session will be used to develop a new generation of virtual humans, capable of portraying the complex emotions we recorded. These sophisticated avatars will act as video game characters and even news presenters!

The mocap session will also be a huge steppingstone for the VHESPER Project, which aims to study the psychological impact of virtual humans on our society as they become increasingly integrated into daily life. Animations of emotionally expressive virtual humans will be used as stimuli for future research.

We have bold plans to study how people perceive emotionally expressive virtual humans, assess the authenticity of their expressions and use virtual humans to create expressions that would be impossible for a human to act out – for example, a crying avatar that is nonetheless expressing awe or contentment.

The journey to use virtual humans to better understand how people process emotions is just beginning!

Director: Niels Voskens

Directors on Emotions: Chrysiis Syrri Tsompanopoulou & Kornelia Reinfuss

Producers: Nick van Apeldoorn & Zoltan Batho G

Technical Team: Alexander van Buggenum & Phil de Groot

Actress: Aniek Venhoeven