By combining real-time rendering with LED walls, ICVFX gives actors an immersive view of the virtual environment and the world of their characters. Experimental productions were set up to test whether audio can further support the actor’s experience and raise overall acting quality.

Introduction

As virtual production (VP) rapidly matures, in-camera visual effects (ICVFX) continues to redefine how filmmakers approach digital storytelling. Despite real-time collaboration, a quick iterative process, and steadily improving visual fidelity, sound engineering remains a point of contention within the ICVFX pipeline. The curved LED walls, fan noise from rendering computers, and general acoustics of XR stages can lower the quality of audio recordings. Sound is therefore often treated as a problem to minimise, and actors end up performing in sterile, overly technical XR stages with minimal feedback from the environment, depending heavily on their imagination to fill in the gaps. This can affect an actor’s consistency in delivery, emotional and physical responsiveness, and narrative immersion within the scene. Long production days are not only inefficient and costly; they also tire the actor’s imagination.

The premise explored here is that real-time audio supporting the actor’s performance could reduce the number of takes needed to perform a scene effectively. Real-time audio completes the soundscape of the virtual environment, bringing it closer to a traditional film set. This could in turn lessen the cognitive load on actors during production and improve overall acting quality. This gem asks: how can diegetic real-time audio improve actor performance in virtual production without compromising technical integrity?

Experimental Productions

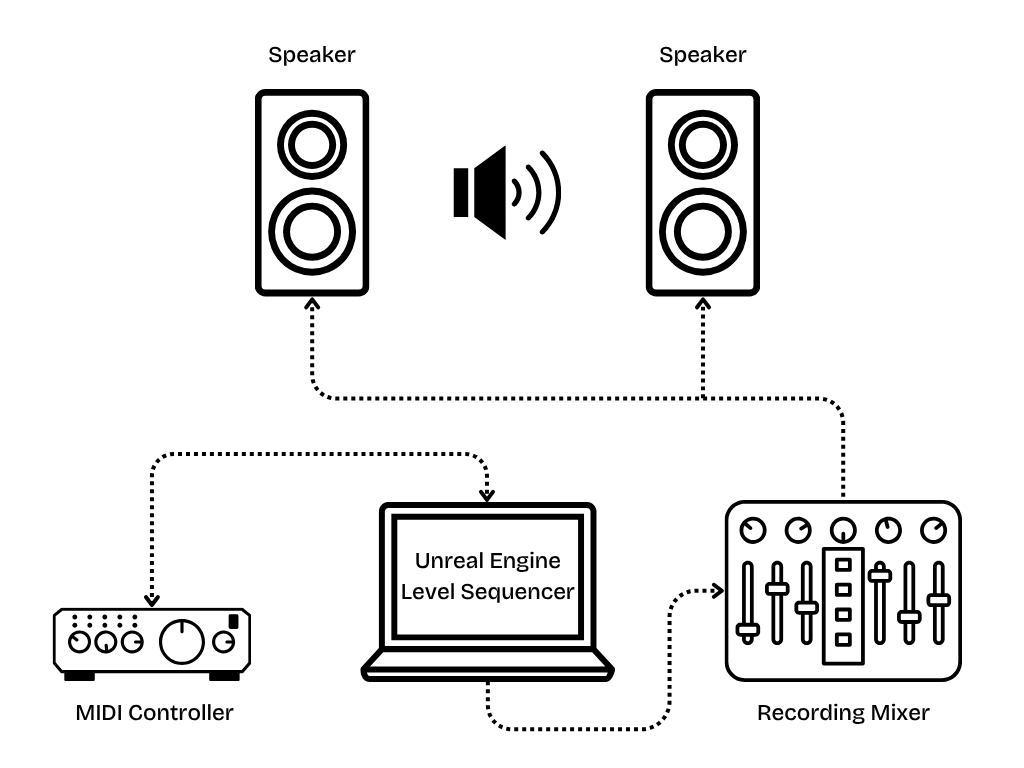

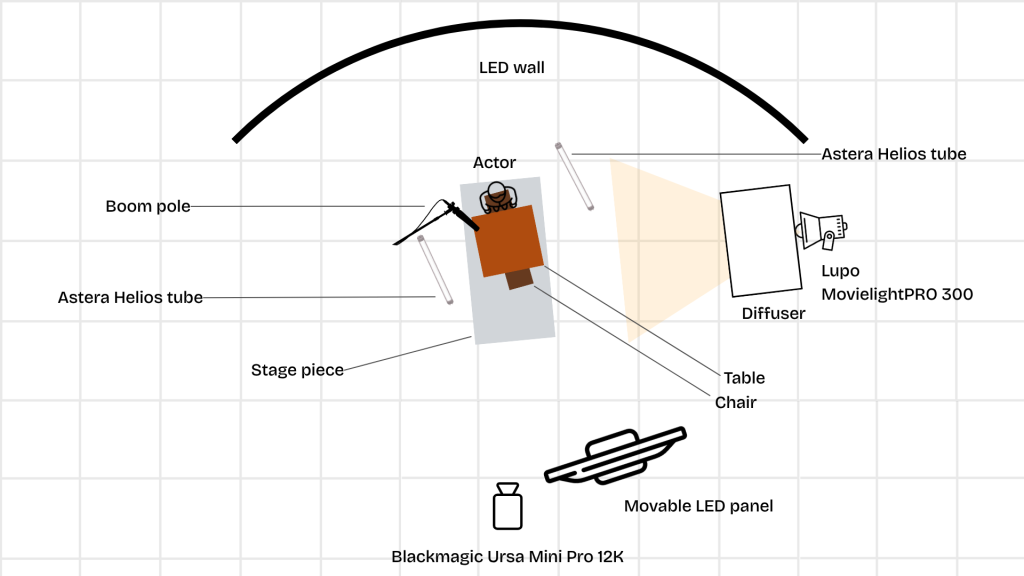

A series of four ICVFX productions was held to compare how actors perform with and without diegetic real-time audio. In each, actors performed a monologue in a typical ICVFX setup, extended with a MIDI board, a recording mixer, and two speakers for audio playback. The diegetic real-time audio was integrated through Unreal Engine’s Sequencer, so that the same simulated real-world physics that enhances asset creation was extended to the audio elements as well. The sound system was set up to feel realistic to the actors without obstructing filming. Two scenes were recorded for each actor: one with no audio and one with the diegetic real-time audio playing live during filming. The context-specific sounds were chosen to fit the bistro scene and included murmuring, footsteps, creaking floors, plates, cutlery, and glasses clinking together – a symphony of a busy restaurant!

Results

The results pointed to a shift in the actors’ approach to the scene when diegetic real-time audio was used. Actors treated the audio playback as a motivational anchor, which helped their emotional state and physical expression align more naturally with the character they were portraying. Participants reported heightened immersion, quicker transitions into character, and more grounded performances. The real-time soundscape reduced the cognitive load that performers often face in an overly digital environment: instead of imagining what the virtual environment sounds like, actors could respond to it directly, making their reactions more organic and instinctive. When combined with acting techniques, the diegetic real-time audio served as a tool that helped actors align their choices with the context of the virtual world around them.

However, the research also exposed potential risks and limitations for industry professionals looking to scale up. While the experimental productions were successful in a controlled environment, scaling such a workflow to full-length productions could introduce several challenges. In larger productions, real-time audio might interfere with dialogue recording clarity or introduce latency issues. The effectiveness of the system depends heavily on strategic speaker placement and playback quality. More elaborate scenes would require greater customisation, inevitably increasing setup time and demanding closer coordination across departments. The success of such systems depends on involving the audio team from previs onward, so that audio assets are built into the environment design as early as other technical elements. Without this shift, real-time audio risks losing much of its positive impact on actor performances.
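To make the latency and speaker placement concerns more concrete, the short Python sketch below estimates how long a sound cue takes to reach an actor. It is a rough illustration, not a description of the setup used in the experimental productions: the function names, the buffer size, the sample rate, and the speaker distance are all hypothetical. It relies only on two well-known relationships: an audio buffer adds a delay of buffer frames divided by sample rate, and sound travels through air at roughly 343 m/s.

```python
# Rough latency estimate for on-stage audio playback.
# All names and example values are hypothetical, for illustration only.

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at 20 °C


def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Delay added by one audio buffer, in milliseconds."""
    return 1000.0 * buffer_frames / sample_rate_hz


def acoustic_delay_ms(distance_m: float) -> float:
    """Time for sound to travel from the speaker to the actor, in milliseconds."""
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_S


def total_latency_ms(buffer_frames: int, sample_rate_hz: int,
                     distance_m: float, extra_ms: float = 0.0) -> float:
    """Buffer delay plus acoustic delay plus any other known delay (extra_ms)."""
    return (buffer_latency_ms(buffer_frames, sample_rate_hz)
            + acoustic_delay_ms(distance_m)
            + extra_ms)


def frames_of_delay(latency_ms: float, fps: float) -> float:
    """Express a latency as a fraction of video frames at the given frame rate."""
    return latency_ms * fps / 1000.0


if __name__ == "__main__":
    # Assumed values: 512-frame buffer, 48 kHz playback, speaker 5 m from the actor.
    latency = total_latency_ms(buffer_frames=512, sample_rate_hz=48_000, distance_m=5.0)
    print(f"Estimated latency: {latency:.1f} ms")
    print(f"Equivalent video frames at 24 fps: {frames_of_delay(latency, 24):.2f}")
```

With these assumed values the estimate comes to about 25 ms, or roughly 0.6 of a frame at 24 fps. Doubling the speaker distance adds roughly another 15 ms, which is one reason speaker placement matters as stages and scenes grow.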

Recommendations

This gem reframes audio not as a production nuisance, but as an opportunity to improve actor performances within the ICVFX pipeline. Moving forward, expanding the role of diegetic real-time audio will likely require more collaborative planning and possibly new roles that bridge the gaps between audio engineers, VP technicians, and virtual art departments. For professionals working in the VP industry, these findings point toward a growing need to rethink how sound is positioned within the overall workflow. While visual fidelity has understandably led much of the development in ICVFX, immersive storytelling depends equally on believable soundscapes. Recognising the actor’s perspective within these environments highlights an opportunity to better support performance by extending the shared frame of reference of the diegetic space beyond visuals alone. As virtual production continues to gain traction across the film industry, practices that enhance authenticity and reduce technical barriers for performers will be crucial. The potential payoff is substantial: more authentic performances, reduced production costs, and a more seamless blend of real and digital worlds.