Introduction

Interactive experiences increasingly rely on believable digital actors. Whether they appear as game characters or news presenters, virtual humans must convey authentic emotional depth to earn user trust and sustain immersion. This post distils the R&D we have carried out at Breda University of Applied Sciences (BUas) to push beyond conventional motion-capture practices and deliver avatars that feel alive.

Our goal was clear:

- Capture a broad, nuanced palette of human emotion.

- Integrate physiological details such as crying and hyperventilation that sell the illusion of life.

- Make everything runtime-ready, loopable, and context-aware inside Unreal Engine’s MetaHuman framework, so that the same data powers both cinematic sequences and real-time interactions.

Capturing the Human Palette of Emotions

On 6 June 2025, we invited a professional actress to the XR Stage to record 23 distinct emotions. Our set extends Ekman’s six basic emotions (happiness, sadness, anger, disgust, surprise, fear) with more nuanced affects such as awe, contempt, triumph, pain, relief, and interest.

Each emotion was recorded as:

- A full-body performance with characteristic gestures and posture.

- A close-range facial performance to capture subtle muscle activations.

All performances went through a post-processing step that matched each clip’s start and end key poses, so the clips can loop indefinitely without visible pops, a vital requirement for in-game idle states.
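One common way to enforce matching start and end poses is to crossfade the last few frames of a clip toward its first frame. The Python below is an engine-agnostic, illustrative sketch only: it treats a pose as a flat list of channel values (the hypothetical `Pose` type), and `make_loopable` with its smoothstep weighting is our assumption, not necessarily the exact post-processing used in this project.

```python
from typing import List

Pose = List[float]  # hypothetical: one value per animated channel (curve or joint angle)


def smoothstep(t: float) -> float:
    """Ease-in/ease-out weight in [0, 1] with zero slope at both ends."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def make_loopable(frames: List[Pose], blend_frames: int) -> List[Pose]:
    """Blend the tail of a clip toward its first pose so the last frame matches the first."""
    if blend_frames < 1 or blend_frames >= len(frames):
        raise ValueError("blend_frames must be in [1, len(frames) - 1]")
    start = frames[0]
    out = [list(p) for p in frames]  # copy so the source clip is untouched
    first_blend = len(frames) - blend_frames
    for i in range(first_blend, len(frames)):
        # Weight rises through the window and reaches exactly 1 on the final frame.
        w = smoothstep((i - first_blend + 1) / blend_frames)
        out[i] = [(1.0 - w) * a + w * b for a, b in zip(frames[i], start)]
    return out
```

Because the final frame ends up identical to the first, a looping player should treat it as the loop point (or drop it) so the pose is not held for an extra frame. In practice, rotation channels would be blended as quaternions rather than per component.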

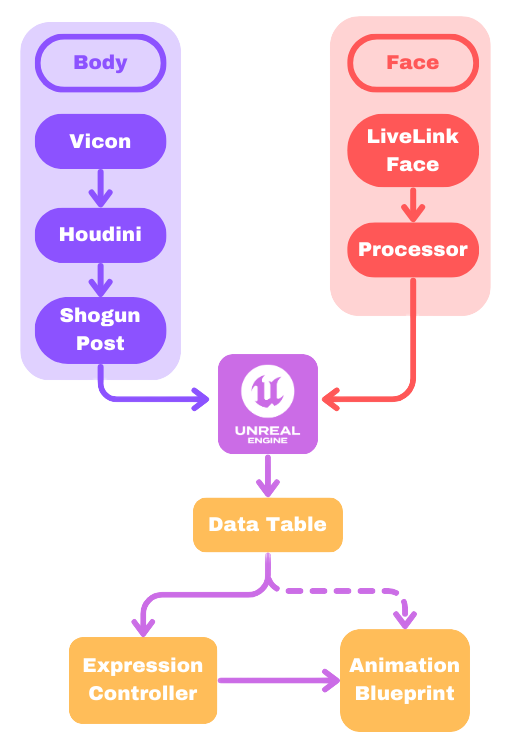

Pipeline overview:

More about the motion capture: Link

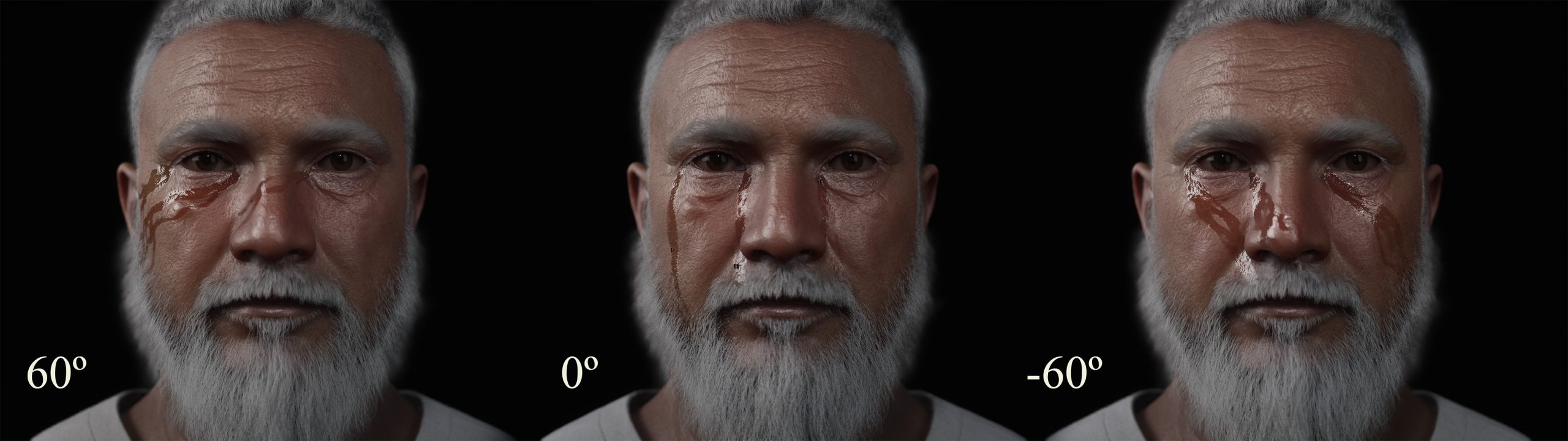

Crying System – Reactive Tears Driven by Performance

Authentic sadness is more than a facial pose: it involves tears and subtler cues such as changes in breathing. Our crying system augments the captured animation with:

- A dynamic tear particle system that reacts to physics.

- A wetness material effect that spreads down the cheeks along a timed gradient.

- A cry intensity parameter (0–1) exposed to Blueprints, allowing designers or AI logic to ramp crying up or down in real time.
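To illustrate how a timed wetness gradient can be tied to a single cry intensity value, here is a minimal Python sketch. It assumes the cheek is parameterised by a normalised height `v` (0 at the lower eyelid, 1 at the jawline) and that a moving "wet front" advances faster at higher intensity. `CheekWetness` and all of its rates are hypothetical; in the engine the equivalent logic would drive material parameters rather than run on the CPU like this.

```python
from dataclasses import dataclass


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


@dataclass
class CheekWetness:
    """Hypothetical model of the timed wetness gradient on one cheek."""
    spread_speed: float = 0.25  # assumed: cheek lengths per second at full cry intensity
    dry_speed: float = 0.1      # assumed: how fast the wet front recedes once crying stops
    edge_width: float = 0.1     # width of the soft transition between wet and dry skin
    front: float = 0.0          # 0 = lower eyelid, 1 = jawline

    def update(self, dt: float, cry_intensity: float) -> None:
        """Advance the wet front by one frame; higher intensity spreads it faster."""
        k = clamp01(cry_intensity)
        if k > 0.0:
            self.front = clamp01(self.front + self.spread_speed * k * dt)
        else:
            self.front = clamp01(self.front - self.dry_speed * dt)

    def wetness_at(self, v: float) -> float:
        """Wetness in [0, 1] for a skin point at normalised height v below the eye."""
        # Fully wet well above the front, fading to dry at the front itself.
        return clamp01((self.front - v) / self.edge_width)
```

Receding the front when intensity drops to zero is one simple choice; it makes the cheek dry from the jawline upward instead of switching off instantly.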

Because the tears are physically reactive, their motion comes from the facial animation and from gravity, so they are driven by the captured performance rather than by a canned VFX track.
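The idea of tears "borrowing" motion from the face can be sketched as a point particle that inherits the velocity of the animated skin point it spawns from, after which gravity and drag take over. This is a simplified Python illustration under our own assumptions (metres, Z-up, linear drag, no surface adhesion or collision); `emit_tear`, `step`, and `skin_velocity` are hypothetical helpers, not the project's particle setup.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Z-up like Unreal, but in metres (Unreal itself works in centimetres).
GRAVITY: Vec3 = (0.0, 0.0, -9.81)


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def skin_velocity(prev: Vec3, curr: Vec3, dt: float) -> Vec3:
    """Finite-difference velocity of an animated skin point between two frames."""
    return scale(add(curr, scale(prev, -1.0)), 1.0 / dt)


@dataclass
class TearDrop:
    position: Vec3
    velocity: Vec3


def emit_tear(skin_pos: Vec3, skin_vel: Vec3, inherit: float = 1.0) -> TearDrop:
    """Spawn a drop at the skin point, inheriting (a fraction of) its animated velocity."""
    return TearDrop(skin_pos, scale(skin_vel, inherit))


def step(drop: TearDrop, dt: float, drag: float = 2.0) -> None:
    """Semi-implicit Euler: update velocity from gravity and linear drag, then position."""
    acc = add(GRAVITY, scale(drop.velocity, -drag))
    drop.velocity = add(drop.velocity, scale(acc, dt))
    drop.position = add(drop.position, scale(drop.velocity, dt))
```

For example, a cheek point that moves 1 cm sideways in one 10 ms frame launches its tear at 1 m/s sideways, and gravity then bends the path downward over the following frames.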

Hyperventilation – Procedural Breathing Layer

Stress, panic, and even ecstatic surprise often trigger rapid, irregular breathing. We authored inhale and exhale key poses with Control Rig and looped them in sequence, using procedural techniques to modulate the amplitude and tempo of the motion.

The system is driven by a single scalar breathing-intensity parameter, which makes it easy to link to gameplay.
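A minimal Python sketch of how one intensity value might modulate both tempo and amplitude while cycling between two key poses. The breath rates, amplitude range, per-breath jitter, and the `BreathingLayer` class are all illustrative assumptions rather than the values used in our setup; the output is a blend weight from the exhale pose (0) to the inhale pose (1).

```python
import math
import random


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


class BreathingLayer:
    """Hypothetical procedural breathing driver returning an exhale-to-inhale blend weight."""

    CALM_BPM = 12.0   # assumed resting rate, breaths per minute
    PANIC_BPM = 40.0  # assumed hyperventilation rate

    def __init__(self, seed: int = 0, jitter: float = 0.15) -> None:
        self.phase = 0.0        # position within the current breath, 0..1
        self.rng = random.Random(seed)
        self.jitter = jitter    # max +/- tempo variation per breath at full intensity
        self.cycle_scale = 1.0  # tempo multiplier for the current breath

    def update(self, dt: float, intensity: float) -> float:
        k = max(0.0, min(1.0, intensity))
        bpm = lerp(self.CALM_BPM, self.PANIC_BPM, k) * self.cycle_scale
        # Accumulating phase (instead of evaluating cos(rate * time)) keeps the
        # motion continuous when the tempo changes mid-breath.
        self.phase += dt * bpm / 60.0
        while self.phase >= 1.0:
            self.phase -= 1.0
            # New tempo for the next breath; irregularity grows with intensity.
            self.cycle_scale = 1.0 + self.rng.uniform(-1.0, 1.0) * self.jitter * k
        amplitude = lerp(0.3, 1.0, k)  # shallow when calm, full range when panicking
        return amplitude * (0.5 - 0.5 * math.cos(2.0 * math.pi * self.phase))
```

Calling `update(dt, intensity)` once per frame and feeding the result into a pose blend is enough to link the layer to any gameplay variable.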

Because the animation graph is additive, it stacks on top of any base animation, be it an idle emotion in a cut-scene or a real-time conversational gesture, without overriding the underlying motion. The same layer works both in Level Sequences for offline cinematics and in gameplay, for example with chatbot-driven avatars.
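The additive principle can be shown on scalar channels: an additive layer stores only the difference between a pose and a reference pose, and that weighted difference is added to whatever base pose is playing. This Python sketch is a simplification with hypothetical channel names; real additive animation combines rotations by multiplying quaternions rather than subtracting and adding values.

```python
from typing import Dict

Pose = Dict[str, float]  # hypothetical: channel name -> value


def make_additive(pose: Pose, reference: Pose) -> Pose:
    """Store only the difference from a reference pose, as an additive layer does."""
    return {k: pose[k] - reference.get(k, 0.0) for k in pose}


def apply_additive(base: Pose, delta: Pose, weight: float) -> Pose:
    """Add a weighted delta on top of any base pose; untouched channels pass through."""
    out = dict(base)
    for k, d in delta.items():
        out[k] = out.get(k, 0.0) + weight * d
    return out
```

With a weight of 0 the base pose is returned unchanged, which is why the breathing layer can be faded in and out over any emotion clip without disturbing it.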

Conclusion – Reactive Avatars

By combining loopable emotion captures, additive physiological layers, and a parameter-driven animation state machine, the system enables reactive MetaHuman avatars. External services, such as a conversational AI, a voice-stress analyzer, or a bespoke gameplay script, can set high-level variables such as emotion state, cry intensity, and breathing intensity at runtime.

The character responds immediately with the matching facial expression, body language, tears, and breathing pattern, allowing chatbots and other interactive systems to convey emotion convincingly.
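To make the runtime interface concrete, here is a hedged Python sketch of a small driver that external services could call. The `Emotion` values are an illustrative subset of the 23 captured emotions; `AvatarEmotionDriver`, its setters, the crossfade time, and the exponential smoothing rate are our assumptions. Smoothing the incoming values keeps abrupt updates from a chatbot or analyzer from causing visible pops.

```python
import math
from enum import Enum


class Emotion(Enum):
    # Illustrative subset of the 23 captured emotions.
    HAPPINESS = "happiness"
    SADNESS = "sadness"
    ANGER = "anger"
    FEAR = "fear"
    RELIEF = "relief"


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def approach(current: float, target: float, rate: float, dt: float) -> float:
    """Frame-rate-independent exponential smoothing of current toward target."""
    return target + (current - target) * math.exp(-rate * dt)


class AvatarEmotionDriver:
    """Hypothetical bridge between external services and the animation layers."""

    def __init__(self, emotion: Emotion, smoothing_rate: float = 4.0,
                 crossfade_s: float = 0.5) -> None:
        self.emotion = emotion
        self.previous_emotion = emotion
        self.emotion_blend = 1.0  # 0 = previous emotion clip, 1 = current emotion clip
        self.crossfade_s = crossfade_s
        self.rate = smoothing_rate
        self.targets = {"cry": 0.0, "breathing": 0.0}
        self.values = dict(self.targets)  # smoothed values the animation layers read

    # Setters a chatbot, voice-stress analyzer, or gameplay script would call.
    def set_emotion(self, emotion: Emotion) -> None:
        if emotion is not self.emotion:
            self.previous_emotion, self.emotion = self.emotion, emotion
            self.emotion_blend = 0.0  # start a crossfade between the two looping clips

    def set_cry_intensity(self, value: float) -> None:
        self.targets["cry"] = clamp01(value)

    def set_breathing_intensity(self, value: float) -> None:
        self.targets["breathing"] = clamp01(value)

    def tick(self, dt: float) -> None:
        """Advance the emotion crossfade and ease layer intensities toward their targets."""
        self.emotion_blend = min(1.0, self.emotion_blend + dt / self.crossfade_s)
        for name in self.values:
            self.values[name] = approach(self.values[name], self.targets[name], self.rate, dt)
```

In an Unreal project, the same role would typically be played by variables on an Animation Blueprint or a C++ component; the Python here only illustrates the data flow.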